How to block malicious bots? Secure your website

Implementing appropriate protective measures can effectively safeguard websites from attacks and data breaches. This article explains what malicious bots are and the harm they cause, then covers methods for blocking them, such as CAPTCHA, traffic analysis, and IP blocking.

What Are Bots?

Bots are programs designed to automatically perform specific tasks, often mimicking human behavior.

They can perform a wide range of tasks on the Internet, such as data scraping, information retrieval, and automated interactions. Some bots improve efficiency and automate processes, but others are used maliciously and create security risks.

What Are Good Bots?

A good bot does not harm the network environment. Like any bot, it is an automated tool that performs tasks according to its designer's requirements, and it improves people's work efficiency.

Good bots can help companies work more efficiently. For example, bots can automatically answer user questions, monitor system health, and crawl web data, as search engine crawlers do.

What Are Bad Bots?

Bad bots are automated programs built for malicious purposes, such as unethical or illegal activities. They may disguise themselves as legitimate users to overwhelm websites with automated requests, steal data, or launch cyberattacks. Common examples of malicious bots include abusive web crawlers (scrapers), botnets, and spam senders.

Types of Malicious Bots

Common types of malicious bots include:

Crawler Bots: Automatically scrape large amounts of data from websites, which can overload servers and crash websites.

DDoS Bots: Launch Distributed Denial of Service (DDoS) attacks to take websites offline.

Spam Bots: Flood email inboxes with massive amounts of spam.

Identity Theft Bots: Attempt to steal account information and personal data by mimicking user behavior.

Malicious Ad Bots: Manipulate ad revenue by generating fake clicks, deceiving advertisers.

The Harmful Effects of Malicious Bots

Malicious bots can cause various problems, such as:

Decreased Website Performance: Malicious bots consume server resources with excessive requests, leading to slow loading times or even crashes.

Data Breaches: Some bots scrape data without authorization, stealing sensitive information such as user data or intellectual property.

Financial Loss: Spam bots may ruin email marketing campaigns, harming revenue generation.

Damaged Brand Reputation: DDoS attacks or fake traffic generation can damage a company’s image, leading to a loss of user trust.

Related Data

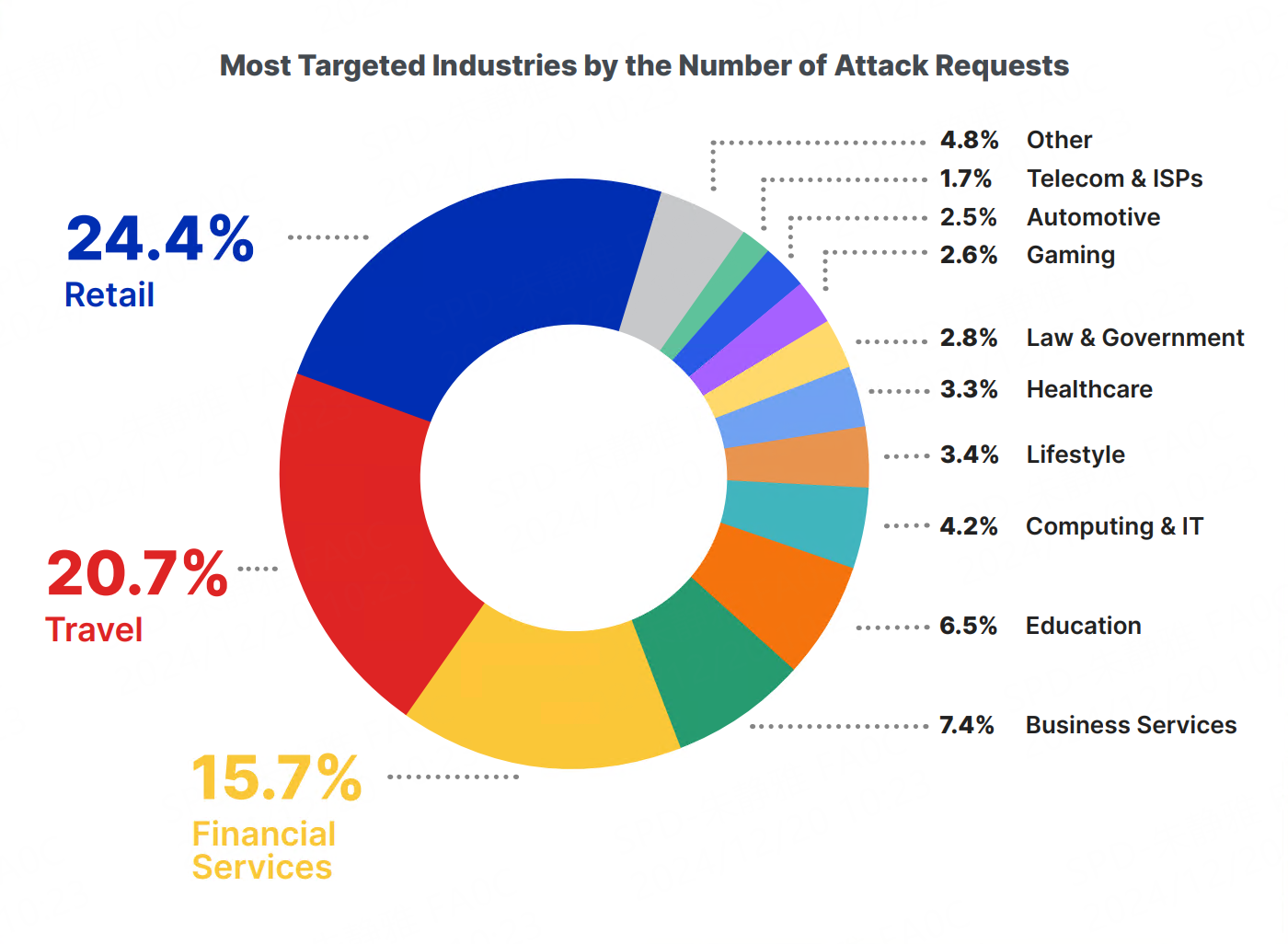

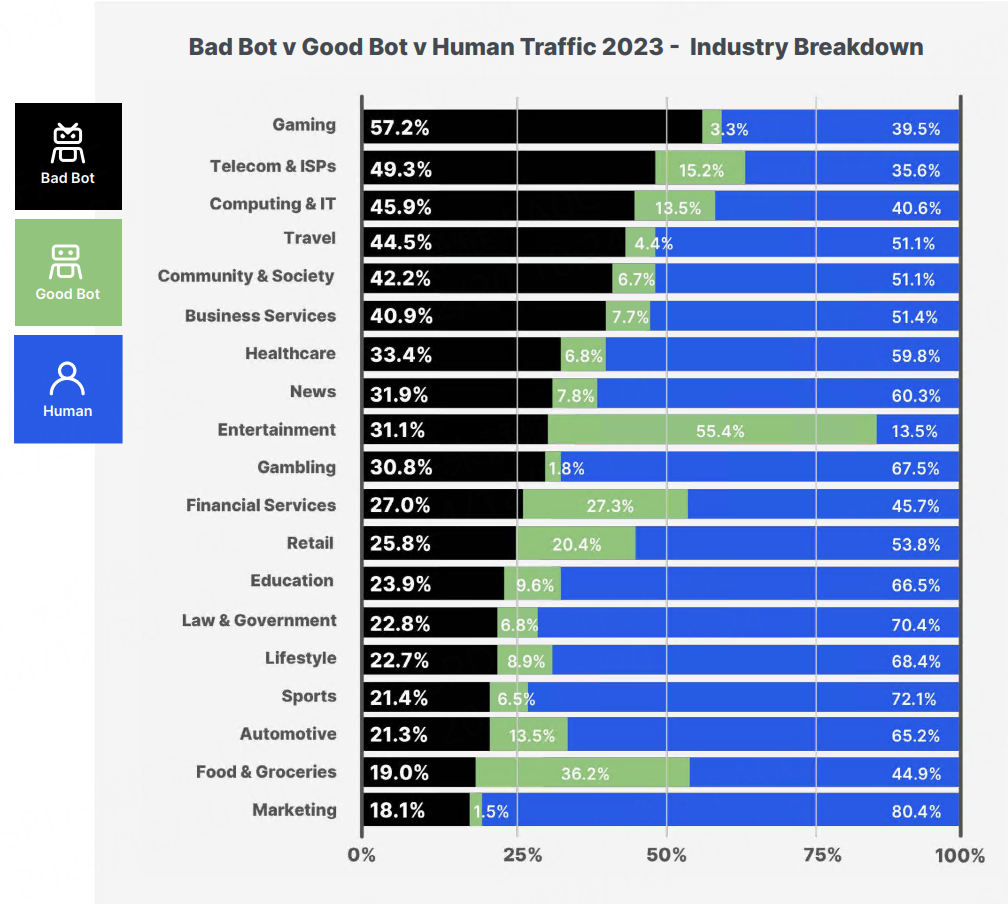

According to the 2024 Imperva Threat Research Report, the retail, travel, and finance sectors are frequently targeted by malicious bot attacks.

Malicious bot attacks are on the rise.

The 2024 report shows that malicious bot attacks are increasing, especially in key industries. As network technology develops, attackers are using more advanced automation tools to evade detection. They use bots to harvest data and account information on a large scale, causing financial damage to website owners.

Urgency of Protective Measures

In response to these threats, more businesses are increasing investments in bot management and protection technologies.

Why Monitoring Website Traffic Is Important

Monitoring website traffic is crucial.

Timely detection of abnormal traffic changes helps you identify malicious bot activity, and continuous monitoring of traffic data makes such anomalies easier to spot. When you find unusual traffic, you can take proactive measures to prevent potential attacks and data leaks, keeping your website secure and stable.

When your website receives a large number of requests from the same IP address in a short period of time, you may be under attack by malicious bots.
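One way to apply this check to historical traffic is to count requests per IP address per minute in your access logs. The sketch below assumes logs in the Common Log Format (the format Apache and Nginx access logs start with by default); the function name `find_suspicious_ips` and the threshold of 100 requests per minute are illustrative assumptions, not fixed rules.

```python
import re
from collections import Counter

# Matches the start of a Common/Combined Log Format line:
# client IP, identity, user, then the [timestamp] in brackets.
LOG_PREFIX = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\]')


def find_suspicious_ips(lines, threshold=100):
    """Return IPs that sent more than `threshold` requests within one clock minute."""
    per_minute = Counter()
    for line in lines:
        match = LOG_PREFIX.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ip, timestamp = match.groups()
        # "10/Oct/2024:13:55:36 +0000" -> "10/Oct/2024:13:55" (drop seconds and zone)
        minute = timestamp.rsplit(":", 1)[0]
        per_minute[(ip, minute)] += 1
    return {ip for (ip, _minute), count in per_minute.items() if count > threshold}


# Example: feed it the lines of an access log file.
# with open("access.log") as f:
#     print(find_suspicious_ips(f))
```

Fixed clock-minute buckets are simple but can split a burst across two minutes; a sliding window, as used for live rate limiting, avoids that.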

How Do I Block Bot Attacks?

Create a robots.txt File

robots.txt is a plain text file placed in the root directory of your website (for example, https://example.com/robots.txt). It tells search engine crawlers (like Googlebot, Bingbot, etc.) which pages they may and may not access. Compliance is voluntary, so the file does not block malicious bots, which can simply ignore it; it only tells legitimate crawlers what to access and what to avoid.
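To see how a compliant crawler interprets these rules, the sketch below feeds a hypothetical robots.txt to Python's standard-library `urllib.robotparser`. The paths `/admin/` and `/checkout/` and the agent name `BadBot` are made up for illustration, and a malicious bot is free to skip this check entirely.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: all crawlers stay out of /admin/ and /checkout/,
# and a crawler identifying itself as "BadBot" is asked to stay out entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /checkout/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A well-behaved crawler asks before fetching each URL.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))   # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))  # False
print(parser.can_fetch("BadBot", "https://example.com/blog/post"))       # False
```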

Use CAPTCHA

Implement CAPTCHA to differentiate between real users and bots.

Implementing CAPTCHA is a widely used defense against bot attacks. It asks users to complete simple tasks, such as recognizing distorted characters or selecting specific images, to distinguish humans from automated programs.

Integrate CAPTCHA into registration and login forms to stop malicious bots from creating fake accounts and carrying out brute-force attacks.

Because these challenges are designed so that, in most cases, only a real human user can complete them, they interrupt the automated workflow of malicious bots.
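A CAPTCHA only protects a form if the server verifies the result. As one example, the sketch below checks a Google reCAPTCHA token server-side by posting it to Google's `siteverify` endpoint; the widget submits the token in the `g-recaptcha-response` form field. The function names and the 0.5 score threshold (which applies only to reCAPTCHA v3) are assumptions for illustration, and other CAPTCHA providers use their own endpoints.

```python
import json
import urllib.parse
import urllib.request

# Google reCAPTCHA server-side verification endpoint.
SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def is_human(result, min_score=0.5):
    """Interpret a decoded siteverify JSON response."""
    if not result.get("success"):
        return False
    # reCAPTCHA v3 responses include a 0.0-1.0 "score"; v2 responses do not.
    score = result.get("score")
    return score is None or score >= min_score


def verify_recaptcha(token, secret, remote_ip=None, timeout=5.0):
    """Send the token from the submitted form to Google and check the verdict."""
    if not token:
        return False  # form submitted without completing the CAPTCHA
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip
    data = urllib.parse.urlencode(fields).encode("ascii")
    try:
        with urllib.request.urlopen(SITEVERIFY_URL, data=data, timeout=timeout) as resp:
            result = json.load(resp)
    except (OSError, ValueError):
        return False  # fail closed if Google is unreachable or the reply is malformed
    return is_human(result)
```

In a login or registration handler, you would call `verify_recaptcha(form["g-recaptcha-response"], SECRET_KEY, client_ip)` and reject the submission when it returns `False`.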

Use HTTP Authentication

Require a username and password to access parts of your website.

HTTP Authentication is an efficient method to restrict bots from accessing specific sections of your website. By requiring users to enter a username and password, it ensures that only authorized individuals gain access to protected pages or resources.

This straightforward yet effective approach not only blocks unwanted bot traffic but also secures sensitive areas of your site. Setting up HTTP Authentication is relatively simple and doesn’t necessitate extensive modifications to your site’s code. It offers a practical way to safeguard confidential features or information.
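HTTP Basic Authentication is usually switched on in the web server configuration, but the check itself is small. The sketch below shows it as Python WSGI middleware, assuming a single username and password; the helper names and credentials are hypothetical. Basic credentials are only base64-encoded, not encrypted, so they should only be sent over HTTPS.

```python
import base64
import hmac


def check_basic_auth(header, username, password):
    """Validate an 'Authorization: Basic <base64(user:pass)>' header value."""
    if not header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except ValueError:  # covers invalid base64 (binascii.Error) and invalid UTF-8
        return False
    user, sep, pwd = decoded.partition(":")
    if not sep:
        return False
    # Constant-time comparisons avoid leaking information through timing.
    user_ok = hmac.compare_digest(user.encode(), username.encode())
    pwd_ok = hmac.compare_digest(pwd.encode(), password.encode())
    return user_ok and pwd_ok


def require_basic_auth(app, username, password, realm="Restricted"):
    """Wrap a WSGI app so every request must carry valid Basic credentials."""
    def wrapper(environ, start_response):
        if check_basic_auth(environ.get("HTTP_AUTHORIZATION", ""), username, password):
            return app(environ, start_response)
        # A 401 with WWW-Authenticate makes browsers show a login prompt.
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", f'Basic realm="{realm}"'),
            ("Content-Type", "text/plain"),
        ])
        return [b"Authentication required\n"]
    return wrapper
```

Wrapping only the WSGI app that serves the protected section keeps the rest of the site public.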

However, this strategy has a drawback: because visitors must enter a username and password, it can hurt the user experience.

IP Blocking and Rate Limiting

Monitor traffic in real time to promptly identify abnormal traffic and suspicious bot behavior, such as high-frequency access from specific IP addresses or unnaturally uniform traffic patterns. IP blocking and rate limiting can then effectively stop these bots.

You can add the IP addresses of malicious bots to a blocklist, so that requests from them are rejected immediately at the firewall or proxy level, preventing further access.
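A blocklist check like the one a firewall or proxy performs can be sketched with Python's standard `ipaddress` module, which also matches whole CIDR ranges. The entries below come from documentation-reserved address ranges rather than real attackers, and `is_blocked` is a hypothetical helper name.

```python
import ipaddress

# Example blocklist: a whole /24, a single IPv4 address, and an IPv6 /48.
BLOCKLIST = [
    ipaddress.ip_network(entry)
    for entry in ("203.0.113.0/24", "198.51.100.23/32", "2001:db8:bad::/48")
]


def is_blocked(client_ip):
    """Return True if the client address falls inside any blocklisted network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return True  # reject malformed addresses outright
    # Membership tests between IPv4 and IPv6 objects simply return False.
    return any(addr in network for network in BLOCKLIST)
```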

A common strategy is to limit the number of requests an individual IP address can make within a specified time frame.

Additionally, you can set request thresholds. For example, if an IP address sends more than 100 requests in a minute, the web application server might block that IP or present a challenge (e.g., a CAPTCHA or login prompt).
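The threshold rule above can be sketched as a per-IP sliding-window counter. This minimal in-memory version assumes a single server process; the class name `SlidingWindowLimiter` is hypothetical, and its defaults mirror the 100-requests-per-minute example.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per IP within any `window_seconds` span."""

    def __init__(self, max_requests=100, window_seconds=60.0):
        self.max_requests = max_requests
        self.window = window_seconds
        self._hits = defaultdict(deque)  # IP -> timestamps of accepted requests

    def allow(self, ip, now=None):
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Forget requests that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: block or challenge this request
        hits.append(now)
        return True
```

Rejected requests are not recorded, so a client that slows down regains access once older requests expire. Because state lives in one process's memory and the per-IP table is never pruned, a production setup spread across several servers would typically keep these counters in a shared store instead.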

Conclusion

Bots play a diverse role in the modern internet, improving automation efficiency but also posing security risks. Understanding the types of malicious bots and their potential threats, and implementing appropriate defense strategies, can effectively protect websites from attacks and data breaches. Regularly monitoring website traffic and adopting suitable security measures are key to ensuring the smooth and secure operation of websites and safeguarding user information.

Frequently asked questions

How do I stop bots from messaging me?

Use CAPTCHA or reCAPTCHA on forms to prevent bots from submitting them. For social media, adjust privacy settings and use bot-blocking tools provided by the platform (e.g., block suspicious accounts, report spam).

How can you tell if someone is using bots?

By analyzing website traffic and looking for abnormal patterns. When your website detects a large number of requests from the same IP address in a short period of time, you should be aware that you may be under attack by malicious robots.

What’s the difference between DDoS and crawler attacks?

A DDoS attack aims to overwhelm a website with a flood of fake requests, rendering it inaccessible, whereas a crawler attack involves scraping large amounts of data, consuming server resources.

How do I stop bot traffic on WordPress?

You can try using a security plugin like Wordfence or Sucuri to block malicious bots.

What are the legal consequences of bot attacks?

Malicious bot attacks like DDoS or data theft are illegal in many regions, and perpetrators may face legal action.

About the author

Clara is a passionate content manager with a strong interest and enthusiasm for information technology and the internet industry. She approaches her work with optimism and positivity, excelling at transforming complex technical concepts into clear, engaging, and accessible articles that help more people understand how technology is shaping the world.

The thordata Blog offers all its content in its original form and solely for informational purposes. We do not offer any guarantees regarding the information found on the thordata Blog or any external sites that it may direct you to. It is essential that you seek legal counsel and thoroughly examine the specific terms of service of any website before engaging in any scraping endeavors, or obtain a scraping permit if required.